In this first part of the tutorial we will become familiar with the most important concepts in CLAM. We will also experience what working with a free (GPL) framework means, using the tools that are usually included in this kind of distribution.

To begin with, point your browser to CLAM's website (www.iua.upf.es/mtg/clam). In the "docs" section you will find all the publicly available documentation. Throughout this tutorial, be sure to consult both the CLAM User and Development Documentation and the Doxygen documentation. The User and Development Documentation covers most of CLAM's concepts and design. The Doxygen documentation contains more detailed technical descriptions of interfaces and the like. Because it is generated directly from the interface comments, the Doxygen documentation is, in general, more up to date than the User and Development Documentation.

After the introduction and your look at the documentation, you should be able to mentally answer the following questions:

Every open-source project should have an associated mailing list. Follow the link on the website and subscribe to the mailing list.

CLAM also has a bug-tracking section that uses the Mantis tool. Go to the "bugreporting" link on the website and register as a user. From now on, report any bug you find in CLAM to the CLAM team using this tool.

CLAM also uses third-party libraries. Point your browser to the "links" section of CLAM's website and take a look at what each external library offers.

By now you should have decided whether you would like to do this tutorial on Linux using GCC or on Windows using Visual C++.

You should also have the CLAM repository correctly deployed on your system. Look at the structure of the whole repository, especially the /src folder. It is worth becoming somewhat familiar with this structure.

After the introduction in Part 1 of this tutorial, we are ready to learn a bit more about the processing capabilities of the framework. To do so, we will work on one of the examples: the SMSTools2 application. This application uses the Spectral Modeling Synthesis scheme to analyze, transform and synthesize audio files. It is interesting enough to dedicate a whole session to its study. Along the way, we will become familiar with more CLAM tools. You will find the files of this example in the /examples/SMS/Tools folder of the repository.

Now we can run and study the example. Note that the main class in the application is really a class hierarchy. Most of the application's functionality is implemented in the SMSBase class. The SMSTools and SMSStdio classes derive from it: the former implements the graphical version of the application and the latter the standard I/O version. We will compile the graphical version, so we will get a graphical interface built with the FLTK library.

You can use the XML file available in the example folder, or edit the default configuration from within the application. Note that one field in the configuration holds the path of the incoming audio file to be analyzed. You will need to modify this path so that it points to a sound file on your local drive (this can also be done directly through the graphical user interface). You can use any of the following sounds: sine.wav, sweep.wav, noise.wav, 1.wav, 2.wav, 3.wav.

Now we can dive a little deeper into the code that implements this application.

First, take a look at the SMSBase class.

Now we will take a look at the SMSAnalysisSynthesisConfig class. This is the first DynamicType class we are looking at.

Most of the application's processing is handled in the AnalysisProcessing and SynthesisProcessing methods of the SMSBase class. Note that both methods do more or less the same thing. First we 'Start' the ProcessingComposite. Then we enter a loop that runs through all the audio, calling the Do method with the appropriate data. Finally, we call the Stop method to "turn off" the ProcessingComposite.
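The Start / loop-over-Do / Stop pattern described above can be sketched in plain C++. The class ToyProcessing and the function ProcessWholeSignal are hypothetical stand-ins, not CLAM's ProcessingComposite API. The sketch only mirrors the control flow. It also adds one rule of its own: Do() refuses to run unless Start() has been called.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for a ProcessingComposite: it only tracks its
// running state and counts how much data it has processed.
class ToyProcessing {
public:
    void Start() { mRunning = true; }
    void Stop() { mRunning = false; }
    bool IsRunning() const { return mRunning; }

    // Processes one chunk; in this sketch it is an error to call Do()
    // outside a Start()/Stop() pair.
    bool Do(const std::vector<float>& chunk) {
        if (!mRunning) throw std::logic_error("Do() called before Start()");
        mSamplesSeen += chunk.size();
        ++mCalls;
        return true;
    }

    std::size_t Calls() const { return mCalls; }
    std::size_t SamplesSeen() const { return mSamplesSeen; }

private:
    bool mRunning = false;
    std::size_t mCalls = 0;
    std::size_t mSamplesSeen = 0;
};

// Start, run Do() over the whole signal chunk by chunk, then Stop.
// The last chunk may be shorter than chunkSize.
void ProcessWholeSignal(ToyProcessing& proc, const std::vector<float>& audio,
                        std::size_t chunkSize) {
    assert(chunkSize > 0);
    proc.Start();
    for (std::size_t b = 0; b < audio.size(); b += chunkSize) {
        const std::size_t e = std::min(b + chunkSize, audio.size());
        std::vector<float> chunk(audio.begin() + static_cast<std::ptrdiff_t>(b),
                                 audio.begin() + static_cast<std::ptrdiff_t>(e));
        proc.Do(chunk);
    }
    proc.Stop();
}
```

With 10 samples and a chunk size of 4, Do() is called three times: twice with 4 samples and once with the remaining 2.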

Let's take a look at the SMSAnalysis class. You might expect it to be very complex, since all the analysis processing is done inside its Do method. Yet the class has surprisingly few lines of code. This is because it uses the ProcessingComposite structure: the class is little more than an aggregate of smaller Processing classes.
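The idea of a composite that is "little more than an aggregate" can be shown with a toy chain of steps. Stage, Gain, Offset and Composite are hypothetical names for this sketch. CLAM's own Processing and ProcessingComposite classes have a considerably richer interface.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical minimal interface for one processing step.
class Stage {
public:
    virtual ~Stage() = default;
    virtual void Do(std::vector<double>& data) = 0;
};

// Multiplies every sample by a constant.
class Gain : public Stage {
public:
    explicit Gain(double g) : mGain(g) {}
    void Do(std::vector<double>& data) override {
        for (double& x : data) x *= mGain;
    }
private:
    double mGain;
};

// Adds a constant to every sample.
class Offset : public Stage {
public:
    explicit Offset(double o) : mOffset(o) {}
    void Do(std::vector<double>& data) override {
        for (double& x : data) x += mOffset;
    }
private:
    double mOffset;
};

// The composite owns its children; its Do() just runs them in order.
class Composite : public Stage {
public:
    void Add(std::unique_ptr<Stage> child) {
        assert(child != nullptr);
        mChildren.push_back(std::move(child));
    }
    void Do(std::vector<double>& data) override {
        for (auto& child : mChildren) child->Do(data);
    }
private:
    std::vector<std::unique_ptr<Stage>> mChildren;
};
```

Because Composite is itself a Stage, composites can be nested. This is how a large algorithm can be built from small, separately testable pieces.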

CLAM (like any development framework) has its own coding conventions: a set of rules and recommendations we should follow when writing CLAM-compliant code.

Now we are ready to implement our first CLAM application. It will be a very minimal application that simply loads an audio file into RAM and then saves it again under another file name.

First of all, you must start a new CLAM project. Consult the section on creating a new CLAM project in the CLAM User and Development Documentation for the platform you have chosen. For our first application we will be using CLAM's Audio, AudioFileIn and AudioFileOut classes.

We want to make our application a little more flexible (as we will extend it later on), so we will now create a very simple user interface.

In this part of the tutorial we will focus on the study of the audio signal in the time domain and the tools that CLAM has for handling it.

To start with, we will work a little bit more on the application we designed in Part 3. We will insert an audio signal visualizer.

CLAM has a rather large and complex visualization module, but we will only use one of its features: the Plots.

Now we are ready to add a Plot in our application.

You can very easily add audio output support to your application. You will have to compile all the .cxx files in the /tools/AudioIO folder. In addition, compile the files in /tools/AudioIO/Linux if you are compiling on Linux. If you are using Windows, compile instead the files that implement the default RtAudio layer (all the files whose names start with Rt).

Next we will take a deeper look at the CLAM classes we have used so far. To start with, we will focus on the Audio ProcessingData class. Its structure is quite simple, but some of its methods are a bit more complex.

For reading/writing the audio files we are using the AudioFileIn and AudioFileOut classes from the src/Processing folder.

Before we continue with the tutorial, we must restructure our application a bit. Ultimately, we want an application that can analyze audio. This functionality will be encapsulated in a ProcessingComposite.

We must familiarize ourselves with the concepts of Processings, ProcessingComposites and Configs. Before continuing, you should be able to mentally answer these questions:

In the next part of the tutorial we are going to focus on the analysis part of our application. Now we will create a new class called MyAnalyzer, derived from ProcessingComposite. (Note: to do so, we also need to create a Dynamic Type class, derived from ProcessingConfig, to configure it.) For now, the class can simply print "I'm doing it!" in its Do() method, and the configuration class only needs a name field.

Add an instance of this class to your application class, and add a temporary item to the menu which calls MyAnalyzer::Do() in order to test it.
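Here is a starting point for the exercise, sketched under some loud assumptions so that it compiles without CLAM. MyAnalyzerConfig is a plain struct rather than a Dynamic Type derived from ProcessingConfig. MyAnalyzer does not derive from ProcessingComposite. Only the name field and the placeholder Do() come from the exercise; the constructor and the GetConfig() accessor are illustrative additions.

```cpp
#include <cassert>
#include <iostream>
#include <string>

// Stand-in for the configuration class. In the tutorial this would be a
// Dynamic Type derived from ProcessingConfig; here it is a plain struct
// holding only the name field.
struct MyAnalyzerConfig {
    std::string name = "MyAnalyzer";
};

// Stand-in for the analysis composite. In CLAM it would derive from
// ProcessingComposite; this sketch keeps only what the exercise needs.
class MyAnalyzer {
public:
    MyAnalyzer() = default;
    explicit MyAnalyzer(const MyAnalyzerConfig& cfg) : mConfig(cfg) {}

    const MyAnalyzerConfig& GetConfig() const { return mConfig; }

    // Placeholder processing, as the exercise asks.
    bool Do() {
        std::cout << "I'm doing it!" << std::endl;
        return true;
    }

private:
    MyAnalyzerConfig mConfig;
};
```

The temporary menu item would then just call Do() on the instance held by the application class.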

Possibly one of the major attractions of the CLAM library is its spectral processing capabilities. The following parts of this tutorial will focus on this domain while we become familiar with more CLAM tools and ways of working.

First we have to talk about a very important ProcessingData: the Spectrum. Open the Spectrum.hxx file. It is quite a complex data class, mostly because it allows its data to be stored in different formats.
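To make the idea of "different formats" concrete, here is a plain C++ illustration of two common ways to store a spectral bin. One is a complex number (real and imaginary parts); the other is magnitude plus phase. The MagPhase struct and the two conversion functions are hypothetical and are not CLAM's Spectrum API. They only show that both formats carry the same information and how to convert between them.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <vector>

// One spectral bin stored as magnitude and phase (in radians).
struct MagPhase {
    double mag;
    double phase;
};

// Complex (rectangular) format -> magnitude/phase (polar) format.
std::vector<MagPhase> ToMagPhase(const std::vector<std::complex<double>>& bins) {
    std::vector<MagPhase> out;
    out.reserve(bins.size());
    for (const auto& c : bins) out.push_back({std::abs(c), std::arg(c)});
    return out;
}

// Magnitude/phase -> complex; std::polar rebuilds mag * e^(i*phase).
std::vector<std::complex<double>> ToComplex(const std::vector<MagPhase>& bins) {
    std::vector<std::complex<double>> out;
    out.reserve(bins.size());
    for (const auto& b : bins) {
        assert(b.mag >= 0.0);  // std::polar requires a non-negative magnitude
        out.push_back(std::polar(b.mag, b.phase));
    }
    return out;
}
```

Keeping several formats inside one class saves repeated conversions when different algorithms prefer different representations. The price is that the class must keep track of which formats are present and in sync.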

In order to have a spectrum in our application, we will have to deal with the FFT. At the time of this writing, CLAM has three different implementations of the FFT:

- one based on the Numerical Recipes book;
- another that uses the new-Ooura implementation;
- a third that uses the FFTW library from MIT.

FFTW is the most efficient and is the one used by default. (For more information about the GPL-licensed FFTW library, see fftw.org.)
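Whichever implementation is selected, an FFT computes the discrete Fourier transform `X[k] = Σ x[n]·e^(-2πi·kn/N)` (summing over n = 0 … N-1). It does so in O(N log N) operations, rather than the O(N²) of the direct sum. The sketch below is a textbook recursive radix-2 Cooley-Tukey FFT, included as a reference for what is being computed. It does not describe how FFTW or the other back ends work internally, and the function name Fft is our own.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

using cplx = std::complex<double>;

// Recursive radix-2 Cooley-Tukey FFT; x.size() must be a power of two.
std::vector<cplx> Fft(const std::vector<cplx>& x) {
    const std::size_t n = x.size();
    assert(n > 0 && (n & (n - 1)) == 0 && "size must be a power of two");
    if (n == 1) return x;

    // Split into even- and odd-indexed samples and transform each half.
    std::vector<cplx> even(n / 2), odd(n / 2);
    for (std::size_t i = 0; i < n / 2; ++i) {
        even[i] = x[2 * i];
        odd[i] = x[2 * i + 1];
    }
    const std::vector<cplx> e = Fft(even);
    const std::vector<cplx> o = Fft(odd);

    // Butterflies: X[k] = E[k] + W^k O[k] and X[k + n/2] = E[k] - W^k O[k],
    // where W = exp(-2*pi*i/n).
    const double pi = std::acos(-1.0);
    std::vector<cplx> out(n);
    for (std::size_t k = 0; k < n / 2; ++k) {
        const double angle = -2.0 * pi * static_cast<double>(k) / static_cast<double>(n);
        const cplx t = std::polar(1.0, angle) * o[k];
        out[k] = e[k] + t;
        out[k + n / 2] = e[k] - t;
    }
    return out;
}
```

Radix-2 algorithms like this one need a power-of-two length, which is one reason FFT sizes are usually chosen as powers of two.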

Now we will add an FFT to our analysis composite (MyAnalyzer), and an "Analyze" option to the main user menu of our application. When the user chooses this option, we will ask for the FFT size. One of the problems we have to face is how to "cut" the input audio into smaller chunks, or frames (for the time being, they have to be the same size as the FFT).
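Here is one simple way to do the cutting. It assumes two things, both our own choices rather than requirements of CLAM: the hop size equals the frame size (no overlap), and the last, incomplete chunk is zero-padded rather than discarded. CutIntoFrames is a hypothetical helper that works on a plain std::vector<float> rather than on CLAM's Audio class.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Cuts the signal into consecutive, non-overlapping frames of frameSize
// samples (hop size == frame size). If the signal length is not a
// multiple of frameSize, the last frame is padded with zeros.
std::vector<std::vector<float>> CutIntoFrames(const std::vector<float>& audio,
                                              std::size_t frameSize) {
    assert(frameSize > 0);
    std::vector<std::vector<float>> frames;
    for (std::size_t begin = 0; begin < audio.size(); begin += frameSize) {
        std::vector<float> frame(frameSize, 0.0f);  // zeros fill any short tail
        const std::size_t n = std::min(frameSize, audio.size() - begin);
        std::copy_n(audio.begin() + static_cast<std::ptrdiff_t>(begin), n, frame.begin());
        frames.push_back(std::move(frame));
    }
    return frames;
}
```

Each returned frame can then be fed to an FFT of size frameSize.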

To debug CLAM applications, we can use some of the tools available in the Visualization module. Sometimes, however, it is very useful to generate an XML file with the contents of one of the objects in memory at a given moment. Most CLAM objects can be serialized to XML by calling their Debug() method (a Debug.xml file will be created), either explicitly in code or from a debugger. General-purpose XML serialization/deserialization is provided by the XMLStorage class.

Now we can inspect the contents of our spectrum in XML and describe its main features.

As we have just seen, textual debugging is not always the most convenient way to analyze the effect of a given algorithm or process. We will use a Spectrum Snapshot to inspect the result visually.

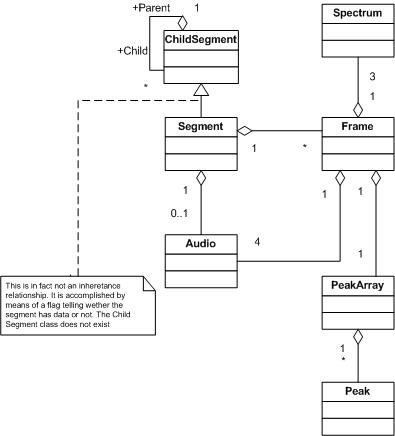

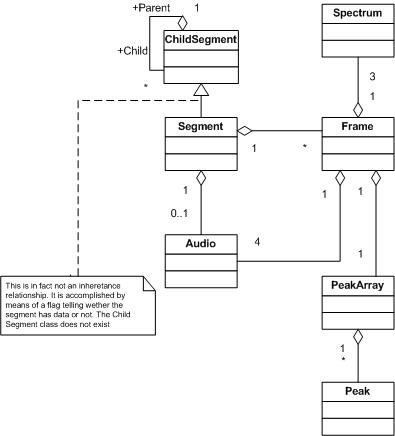

A frame is a short audio fragment together with all the analysis data associated with it.

On the other hand, a segment is a longer audio fragment made up of a set of frames that usually share some common properties (they belong to the same note, melody, recording...).

The Segment class is one of the most important and complex ProcessingData classes available in the CLAM repository. Here you have an approximate UML static diagram of its structure:

Now we are ready to add the CLAM Segment to our application. We will keep every spectrum that is output from the FFT in a different frame in our segment.
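The relationship between a segment and its frames can be sketched with two deliberately simplified structs. SimpleFrame and SimpleSegment are hypothetical and keep only a fraction of what CLAM's Frame and Segment hold. As described above, the sketch stores one spectrum per frame. It also records each frame's center time, computed from the frame's start sample and the sample rate.

```cpp
#include <cassert>
#include <complex>
#include <cstddef>
#include <utility>
#include <vector>

// A frame: a short audio fragment plus the analysis data attached to it.
struct SimpleFrame {
    double centerTime = 0.0;                     // in seconds
    std::vector<float> audio;                    // the audio fragment
    std::vector<std::complex<double>> spectrum;  // its FFT output
};

// A segment: a longer fragment made up of an ordered list of frames.
struct SimpleSegment {
    double sampleRate = 44100.0;
    std::vector<SimpleFrame> frames;

    // Appends a frame for the chunk starting at sample index 'start'.
    void AddFrame(std::size_t start, std::vector<float> audio,
                  std::vector<std::complex<double>> spectrum) {
        SimpleFrame f;
        f.centerTime =
            (static_cast<double>(start) + static_cast<double>(audio.size()) / 2.0) / sampleRate;
        f.audio = std::move(audio);
        f.spectrum = std::move(spectrum);
        frames.push_back(std::move(f));
    }
};
```

In the analysis loop, each chunk and the spectrum computed from it would be passed to AddFrame, so the segment ends up with one frame per FFT.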

When we take an audio chunk directly and feed it to the FFT (as we are doing now), we are actually multiplying the audio chunk by a rectangular window. This means we are convolving the spectrum of our signal with the transform of a rectangular window. That transform is not well suited for analysis, because its high sidelobes spread the energy of each spectral component into distant bins. There are also other important parts of the STFT that we are not doing yet:

- the circular shift, or buffer centering;
- the option of adding zero-padding to our analysis.
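The three missing steps can be sketched in a single function: a better window than the rectangular one, zero-padding, and the circular shift. The Hann window is our illustrative choice, and the function names are hypothetical. We make no claim about which window, or which exact centering convention, SpectralAnalysis uses. The circular shift moves the center of the windowed chunk to index 0 of the FFT buffer (zero-phase windowing). As a result, the phase of the resulting spectrum is measured relative to the center of the frame rather than its first sample.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Symmetric Hann window of length m (an illustrative choice of window).
std::vector<double> HannWindow(std::size_t m) {
    std::vector<double> w(m, 1.0);
    if (m < 2) return w;
    const double pi = std::acos(-1.0);
    for (std::size_t n = 0; n < m; ++n)
        w[n] = 0.5 - 0.5 * std::cos(2.0 * pi * static_cast<double>(n) /
                                    static_cast<double>(m - 1));
    return w;
}

// Windows a chunk, zero-pads it to fftSize and circularly shifts it so
// the center of the window lands at index 0 (zero-phase windowing).
std::vector<double> PrepareFftInput(const std::vector<double>& chunk, std::size_t fftSize) {
    const std::size_t m = chunk.size();
    assert(m > 0 && fftSize >= m);
    const std::vector<double> w = HannWindow(m);

    std::vector<double> buffer(fftSize, 0.0);  // zero-padding by construction
    for (std::size_t n = 0; n < m; ++n) buffer[n] = chunk[n] * w[n];

    // Rotate left by m/2: the second half of the windowed chunk moves to
    // the front, the first half wraps to the end, zeros stay in the middle.
    std::rotate(buffer.begin(), buffer.begin() + static_cast<std::ptrdiff_t>(m / 2),
                buffer.end());
    return buffer;
}
```

Zero-padding comes from the buffer being fftSize samples long while only the first m samples are filled before the shift. Zero-padding samples the spectrum more finely (more bins), but it does not add frequency resolution.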

We could do all these things by hand, but CLAM already has a ProcessingComposite that includes all the necessary functionality: the SpectralAnalysis class.

One of the limitations we had in the previous part is the fact that

the approach used does not allow to have overlapping audio frames (

having a hop-size that is different from the window size). We could

solve this by hand using some tools in the CLAM repository. But to make

it more simple, we will use another Processing Composite: the

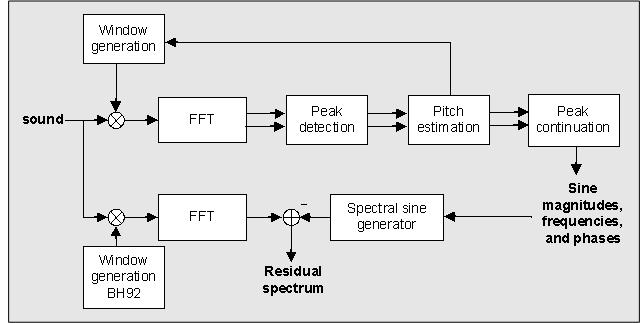

SMSAnalysis. And we will get for free the whole implementation of the

SMS algorithm including spectral peak detection, pitch estimation and

separation of the signal into sinusoidal and residual component.

Here is the SMS analysis block diagram:

Look at the SMSAnalysisConfig class and try to understand its main parameters.
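Whatever the exact parameter names in SMSAnalysisConfig, the effect of a hop size smaller than the window size can be sketched on its own. FrameStarts is a hypothetical helper that lists where each analysis frame begins. It drops any frame that would run past the end of the signal; this is an illustrative choice, and padding the last frame is another option.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Start indices of every analysis frame of windowSize samples that fits
// entirely inside a signal of numSamples, advancing hopSize samples each
// time. When hopSize < windowSize, consecutive frames overlap by
// windowSize - hopSize samples.
std::vector<std::size_t> FrameStarts(std::size_t numSamples, std::size_t windowSize,
                                     std::size_t hopSize) {
    assert(windowSize > 0 && hopSize > 0);
    std::vector<std::size_t> starts;
    for (std::size_t s = 0; s + windowSize <= numSamples; s += hopSize)
        starts.push_back(s);
    return starts;
}
```

With 10 samples, a window of 4 and a hop of 2, frames start at 0, 2, 4 and 6, and each overlaps the previous one by 2 samples. With a hop of 4 there is no overlap and only the frames at 0 and 4 fit.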